The Clock Stops.

The Resonance Begins.

An experimental protocol for semantic event computing. Early evidence for bandwidth compression and cross-architecture transfer. Not production-ready.

Protocol name: Semantic Event Protocol (SEP)

We publish our results and reference code to invite scrutiny and collaboration.

Name Clarification: "Resonance" is the project name. The actual protocol is the Semantic Event Protocol (SEP). There are other unrelated projects with similar names—this is SEP at resonanceprotocol.org by Nikolay Yudin.

A research project by Nikolay Yudin

The Problem

AI is becoming critical infrastructure. And it's controlled by 3 companies in 1 country.

The Monopoly

- NVIDIA controls the hardware

- USA controls NVIDIA (export restrictions on chips)

- OpenAI, Anthropic, Google control the top models

- Everyone else is just a customer, with a kill switch

The Economics

Training cost for a GPT-4 class model

Goes to GPU compute — thousands of H100s for months

The gap is growing exponentially. This is not about money — it's about control.

The Real Risk

"Turn off your API" = digital blockade.

AI is becoming like oil in the 20th century. Except you can't drill for it.

We don't think "catching up" is the answer.

We think the paradigm itself is wrong.

The Shift

From Time-Based to Meaning-Based Computing

OLD PARADIGM

"Compute every nanosecond, whether needed or not."

Result: Energy waste, heat, latency.

NEW PARADIGM

"Compute only when meaning changes."

Result: Native efficiency, sparsity, instant reflex.
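
One way to picture "compute only when meaning changes" is a filter that forwards an input only when its embedding has drifted far enough from the last one it forwarded. The sketch below is illustrative, not the SEP reference code: the class name `SemanticEventFilter`, the cosine-distance test, and the `0.1` threshold are all assumptions made for the example.

```python
import numpy as np

def cosine_distance(a, b):
    """Return 1 - cosine similarity between two vectors."""
    return 1.0 - float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

class SemanticEventFilter:
    """Hypothetical filter: emit only when the embedding drifts past a threshold."""

    def __init__(self, threshold=0.1):  # threshold value is an assumption
        self.threshold = threshold
        self.last_emitted = None

    def process(self, embedding):
        """Return True and update state if this input counts as a new event."""
        if (self.last_emitted is None
                or cosine_distance(embedding, self.last_emitted) > self.threshold):
            self.last_emitted = embedding
            return True
        return False

# Toy stream: three near-identical readings, then a real change, then a repeat.
stream = [
    np.array([1.0, 0.0]),
    np.array([0.99, 0.05]),
    np.array([0.98, 0.10]),
    np.array([0.0, 1.0]),
    np.array([0.05, 0.99]),
]
event_filter = SemanticEventFilter(threshold=0.1)
emitted = [event_filter.process(v) for v in stream]
print(emitted)  # [True, False, False, True, False]
```

Only two of the five inputs trigger downstream work here. How the actual protocol defines a "meaning change" may differ from this distance test.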

Research Results

What We've Demonstrated in Small-Scale Experiments

Preliminary results from controlled benchmarks. Single author, no external replication yet.

These findings suggest promising directions, but require independent validation before production use.

HDC Compression

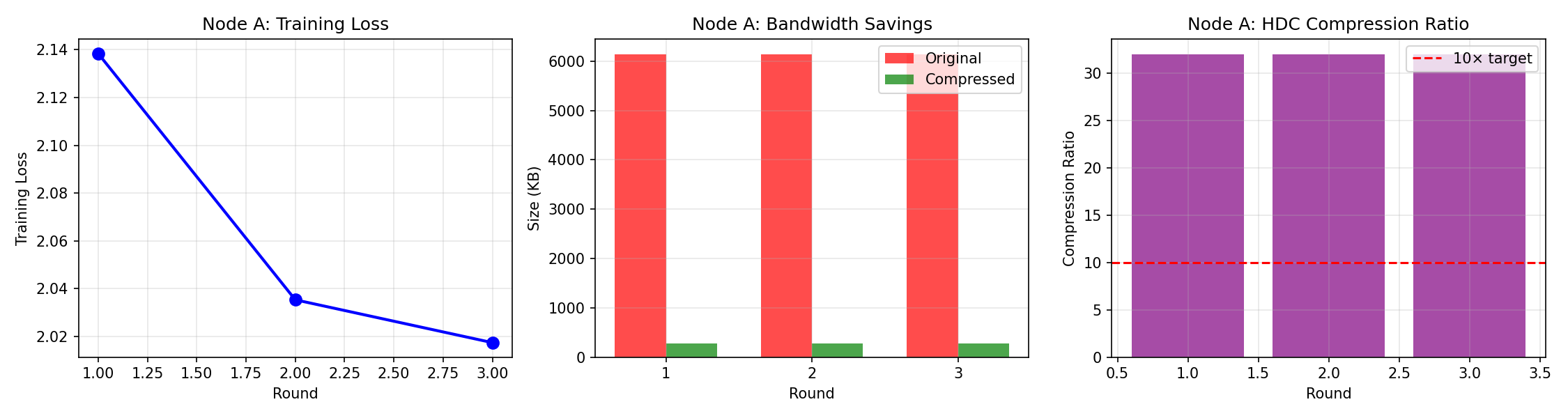

Distributed training bandwidth reduced from 17MB to 271KB per sync using ternary quantization.
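
As a rough illustration of the idea, ternary quantization maps each value to -1, 0, or +1, and each of those fits in 2 bits, so four values share one byte. The sketch below is a minimal NumPy version under stated assumptions: the function names and the thresholding rule (a fraction of the mean absolute value) are hypothetical and not taken from the SEP code.

```python
import numpy as np

def ternarize(x, threshold_ratio=0.7):
    """Map floats to {-1, 0, +1}; small magnitudes become 0.
    The threshold rule (ratio * mean |x|) is an assumption for this sketch."""
    t = threshold_ratio * np.mean(np.abs(x))
    return (np.sign(x) * (np.abs(x) > t)).astype(np.int8)

def pack_2bit(trits):
    """Pack ternary values into bytes, four values per byte."""
    codes = (trits + 1).astype(np.uint8)            # -1, 0, +1 -> 0, 1, 2
    pad = (-len(codes)) % 4                         # pad to a multiple of four
    codes = np.concatenate([codes, np.ones(pad, dtype=np.uint8)])
    codes = codes.reshape(-1, 4)
    packed = (codes[:, 0] | (codes[:, 1] << 2)
              | (codes[:, 2] << 4) | (codes[:, 3] << 6))
    return packed.astype(np.uint8)

def unpack_2bit(packed, n):
    """Inverse of pack_2bit; n is the original number of values."""
    shifts = np.array([0, 2, 4, 6], dtype=np.uint8)
    codes = (packed[:, None] >> shifts) & 3
    return codes.reshape(-1)[:n].astype(np.int8) - 1

rng = np.random.default_rng(0)
update = rng.standard_normal(1000).astype(np.float32)  # stand-in for a model update
trits = ternarize(update)
packed = pack_2bit(trits)
restored = unpack_2bit(packed, len(trits))

print(update.nbytes, packed.nbytes)  # 4000 250
```

Packing is lossless with respect to the ternary values; the loss happens in `ternarize`. Against float32, 2-bit packing on its own gives a 16x size reduction, so any larger ratio would have to come from additional steps not shown in this sketch.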

Knowledge Transfer

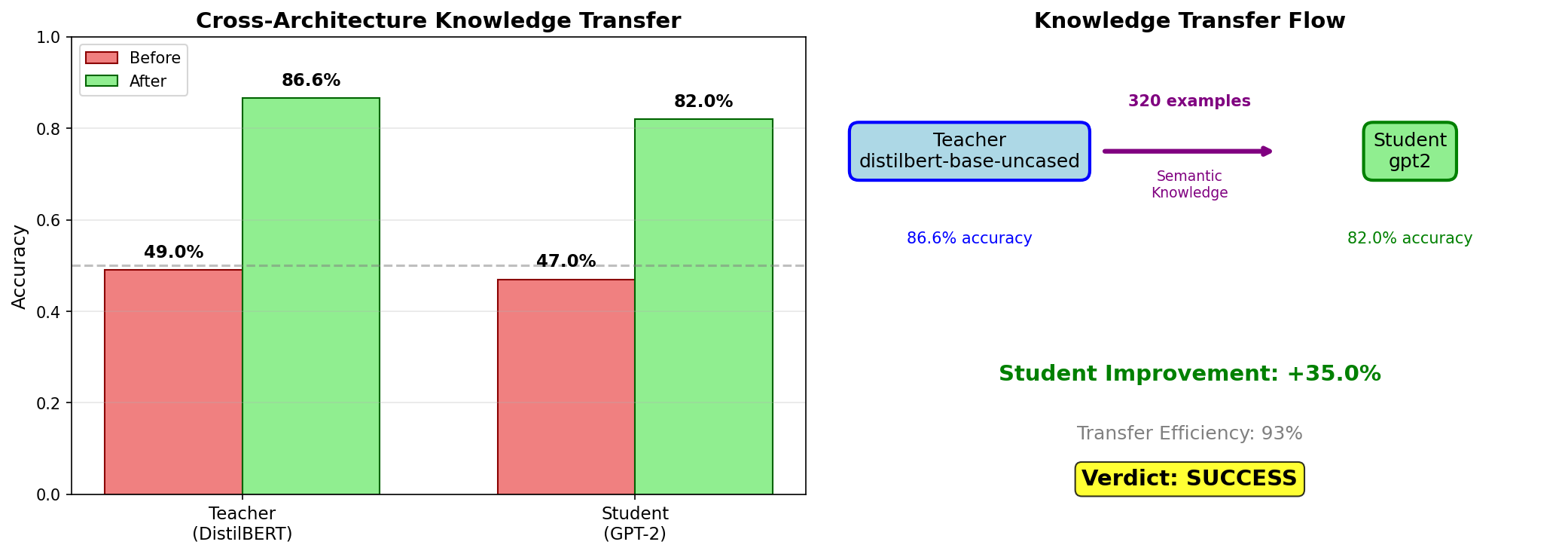

93% cross-architecture transfer efficiency: DistilBERT → GPT-2 via semantic examples, not weights.

HDC Generalization

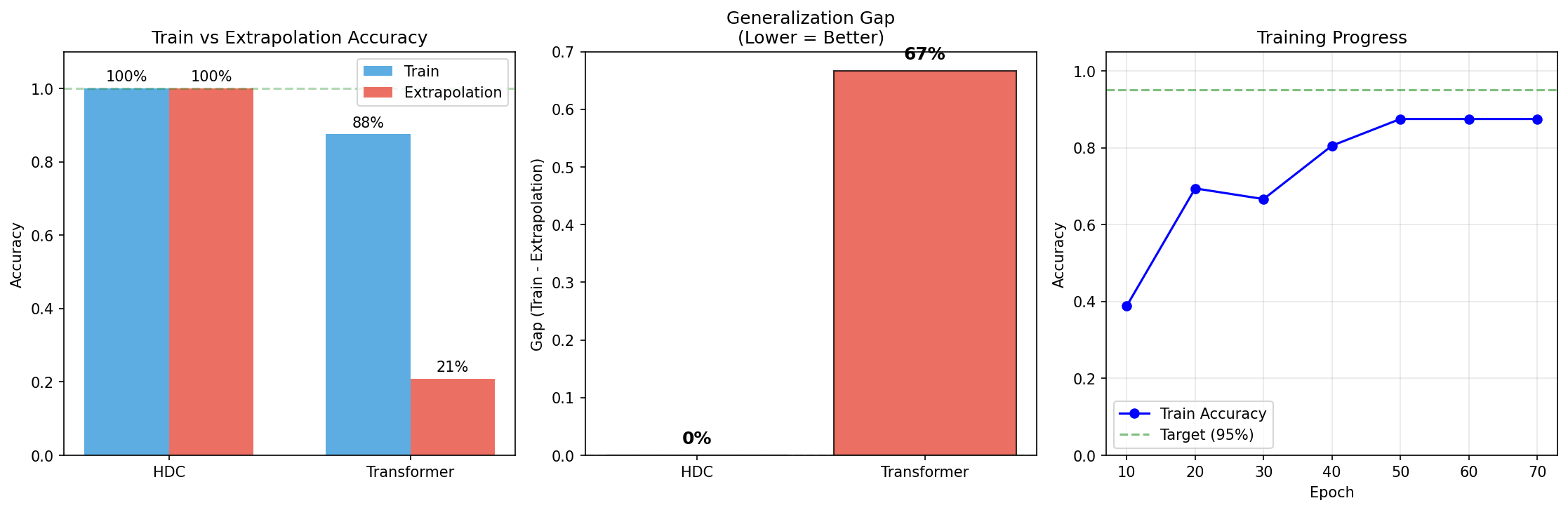

HDC reached 100% compositional generalization on unseen combinations, where Transformers reached only 21%.
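
For readers new to hyperdimensional computing (HDC), the sketch below shows why composition is structural rather than learned: two random high-dimensional vectors are bound by elementwise multiplication, and either factor can be recovered by unbinding. It is a generic textbook-style construction, not the benchmark behind the figure above; the dimensionality, the color and shape vocabulary, and all function names are assumptions for illustration.

```python
import numpy as np

DIM = 10_000  # hypervector dimensionality; an illustrative choice
rng = np.random.default_rng(42)

def random_hv():
    """Random bipolar hypervector with entries in {-1, +1}."""
    return rng.choice(np.array([-1, 1], dtype=np.int8), size=DIM)

def bind(a, b):
    """Bind by elementwise multiplication; self-inverse for bipolar vectors."""
    return a * b

def similarity(a, b):
    """Normalized dot product in [-1, 1]."""
    return float(np.dot(a.astype(np.int32), b.astype(np.int32))) / DIM

colors = {name: random_hv() for name in ["red", "blue", "green"]}
shapes = {name: random_hv() for name in ["circle", "square", "triangle"]}

def encode(color, shape):
    """Represent a (color, shape) pair as a single hypervector."""
    return bind(colors[color], shapes[shape])

def decode_shape(hv, color):
    """Unbind the known color, then pick the nearest shape vector."""
    probe = bind(hv, colors[color])
    return max(shapes, key=lambda s: similarity(probe, shapes[s]))

# No pair is ever "trained"; any combination decodes by construction.
print(decode_shape(encode("blue", "triangle"), "blue"))  # triangle
```

Because decoding relies only on the algebra of binding, a pair that was never presented before behaves the same as any other. Whether that property carries over to larger, noisier tasks is a separate question that this toy example does not address.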

Potential Implications (If Validated at Scale)

Heterogeneous Networks

In our controlled tests, different models could share knowledge without identical architectures.

Edge-Viable Bandwidth

271KB per sync could work on 3G networks. Scaling to production edge environments requires further validation.
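
A back-of-the-envelope check of that claim, assuming a sustained 3G uplink of 1 Mbit/s and 1 KB = 1000 bytes (both are assumptions; real 3G throughput varies widely and protocol overhead is ignored):

```python
def transfer_seconds(payload_bytes, link_bits_per_second):
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return payload_bytes * 8 / link_bits_per_second

LINK_BPS = 1_000_000  # assumed 3G uplink of 1 Mbit/s

compressed = transfer_seconds(271_000, LINK_BPS)       # 271 KB per sync
uncompressed = transfer_seconds(17_000_000, LINK_BPS)  # 17 MB per sync

print(f"{compressed:.1f} s vs {uncompressed:.0f} s")  # 2.2 s vs 136 s
```

Under these assumptions a sync drops from over two minutes to a couple of seconds, which is the difference between an impractical and a plausible sync interval on a constrained link.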

Structural Properties

HDC shows compositional behavior in toy tasks. Whether this holds for safety-critical systems is an open research question.

The Four Axioms

Intelligence is Air

Intelligence should be a property of the environment, not a privilege of the few. It must be ubiquitous, invisible, and accessible like oxygen.

Energy > Compute

Efficiency is the ultimate benchmark. If a system consumes gigawatts to think, it is not intelligent; it is just a furnace.

Distribution > Control

Robustness comes from the mesh, not the monolith. A centralized brain is a single point of failure and control.

Privacy is Physics

Data sovereignty must be guaranteed by hardware constraints (air-gapped semantics), not by legal promises or user agreements.

Honest Status

What Works Today vs What We're Researching

Experimentally Proven

Distributed Training

M3a ✓ Two nodes trained a shared model via Firebase. Loss converged identically.

HDC Compression (32×)

M3b ✓ 17MB → 271KB per sync. Ternary quantization + 2-bit packing.

Cross-Architecture Transfer (93%)

M3c′ ✓ DistilBERT → GPT-2 knowledge transfer via semantic examples.

Compositional Generalization

M2.6 ✓ HDC 100% vs Transformer 21% on unseen combinations.

Researching

Distributed training on edge

RESEARCH: DiLoCo and Hivemind show promise. Not production-ready.

Ternary computing (10-100×)

WAITING: BitNet works. Waiting for ternary hardware.

Semantic training efficiency

SPECULATION: Works for inference, not proven for training yet.

Governance mechanisms

DESIGN: "No one controls" needs real mechanism design.

Our Bet

New hardware is coming: memristors, neuromorphic chips, in-memory computing.

When it arrives, the economics of AI will flip. Datacenters won't be the only way.

We're building the architecture for that future — one that works today and scales exponentially tomorrow.

Future History

Artifacts from 2030

STATUS: RESONANT

Local Intelligence, Global Outage

"For six hours, every major cloud provider went dark. No LLM APIs, no centralized vision services, no auth tokens. Dashboards screamed, markets panicked — but the systems that mattered didn't. Ports kept routing containers. Hospital triage lines kept moving. Traffic lights adapted to real flows. None of these systems were calling home. Their models lived at the edge; their decisions emerged from local semantic events, not remote inference calls. For the first time, the cloud disappeared — and the world did not."

Public Safety Without Surveillance

"Ten years ago, the metropolis was blanketed with CCTV — a nervous system wired straight into storage. Today there are no 'cameras' in the old sense. Intersections still see. Stations still notice. Streets still react. But nothing is recorded, nothing uploaded, nothing stored. Hardware enforces it: only semantic events exist, and they decay on-device. A fight starts? Local nodes trigger light, sound and nearby responders. A lost child? The mesh quietly guides parents — without ever building a face database. Crime went down. Data hoarding fell to zero. The city became attentive, not voyeuristic."

30-Second Semantic Medicine

"People used to wear 'health trackers' that streamed everything to the cloud. It was convenient, but it was surveillance. The new generation tracks nothing. No continuous recording, no streaming. A tiny semantic model runs on-skin. Millions of micro-signals stay local. When patterns drift into meaningful territory — a perfusion mismatch, a metabolic stress vector — the wearable emits a single semantic event. Not data, meaning: 'Act Now'. You walk into a clinic. No upload. Your wearable and the room's mesh converge on a diagnosis in thirty seconds. The device never gives away your secrets. It never even had them."

Endangered Tongues, Embedded in Silicon

"There used to be a joke: 'If it's not in the cloud, it doesn't exist.' That joke killed a thousand languages. No venture-backed model would ever be trained on a village that couldn't pay. With Resonance, the direction flipped. A handful of solar-powered nodes, deep in a mountain valley, listened and learned locally for years — never uploading a byte. Children spoke to grandparents; the mesh distilled patterns into a tiny semantic core that lives only there. Now when a child speaks in the dominant language, the answer arrives in the ancestral one — instantly, offline. No cloud owns it. No corporation can deprecate the API. As long as the village keeps its devices alive, the language remains alive — not as an archive, but as a living interface."

The Demo We're Building

"The Box in the Café"

Imagine this scene:

Someone asks ChatGPT through their browser

You ask your small device — same quality answer

"Now turn off the internet."

Their ChatGPT is dead. Your box still answers.

But wait — the box is too small to hold a full model.

Watch the answer grow as neighboring nodes contribute through the mesh. Each device adds what it knows. Together, they're smarter than any single node.

Intelligence ≠ datacenter

Works offline

Cannot be shut down

No one controls it

This isn't ready yet. But it's what we're building toward.

Join Us

We're looking for people who see the problem and want to build the alternative.

This is a research project, not a startup. We're not promising quick returns.

Engineers

Extend the reference implementation, experiment with edge hardware, optimize protocols.

Researchers

Distributed training, ternary computing, hyperdimensional computing, governance design.

Connectors

Know someone at a sovereign wealth fund? A European AI initiative? A research lab working on memristors?

How to Start

We're not trying to beat OpenAI at their game. We're changing the game.